How to Use AI for Research (as a Content Writer)

Using AI for research can either make your process faster or quietly wreck your credibility. This article breaks down how to get the speed without the damage: using AI to surface ideas, data, and debates while keeping your judgment (and your sources) intact. It’s a realistic look at what actually works for content writers in the trenches.

It’s a little ironic to find myself writing this article. See, the first time I tried using AI for research, it was…not exactly a success.

I was writing a very stat-heavy article on HR tech. My client is a stickler for research and correct attribution. But I wanted to play with a new toy, so I set myself up on that LLM that’s supposed to be great for research — and got to work.

At first, I was overjoyed. It saved me so much Googling! It pulled up recent studies! My brain made that “Hallelujah” chorus sound. I double-checked all the citations and shared the doc.

Sadly, my editor came back with a somewhat bemused comment. I’d cited stats from a so-called “predatory journal” — a low-quality, pay-to-publish journal that exploits authors.

Now, I am a serious research geek. I really care about this stuff. I still don’t know how that one sneaked past me.

Suffice it to say that I flounced off, swearing never to use AI for research again.

And yet. These days, my research process often includes a bit of mucking around with an LLM.

I’ve simply built new checks into the way I work.

And, even though I’m discarding much of the research ChatGPT does for me, it does also genuinely save me time.

There are countless prompt lists online, but I’ve pulled together a few that have really worked for me. Hopefully, they save you some time, too.

What do I mean by content research?

Here, I’m referring to the research that content writers do before drafting a new piece of content: gathering the ideas, data, and perspectives that shape a piece.

(I’m not including the research that content strategists do — market analysis, keyword research or the like. Mostly because I don’t want this article to be 20,000 words long.)

The goal of content writing research is to make the piece of content work better. To be clearer, more persuasive, and more trustworthy.

Content research will usually include some combination of:

- Conceptual research: Clarifying definitions, frameworks, and mental models

- Competitive research: Scanning what’s already out there to find gaps

- Audience voice research: Listening to customers in forums, reviews, and conversations

- Sourcing data: Pulling studies, reports, and stats to anchor your claims

- Finding experts: Interviewing or quoting authoritative voices in the field

And AI can be helpful for all of these — with a few guardrails in place.

How to use AI to understand the topic

Of course, to write a good piece, you need to know (at least broadly) what you’re talking about.

And, even if you’re in-house or writing in a space you know well, nobody knows absolutely everything about their given subject matter.

You don’t need to become an expert overnight. But you do need to know the frameworks, models, or mental shortcuts your audience already uses. What are the current debates? What’s obvious about the topic, and what’s not? Where is the nuance?

I used to spend hours reading industry publications and trying to mentally synthesize the front page of Google.

These days, I might just use a prompt like this one:

Act as a research assistant helping me prepare a content brief. I’m writing about [topic].

List the 5–7 most recent (past 24 months) primary sources (reports, surveys, studies, or datasets) relevant to this topic.

For each, include: publisher, year, methodology/sample size, key finding, and a direct URL to the original source (not a blog quoting it).

Summarize where these sources agree and where they contradict each other.

Flag any areas where evidence is weak, outdated, or mostly opinion.

Suggest at least 2 contrarian or under-explored angles I could use to differentiate my content.

Warning: You probably don’t need me to tell you this, but while AI is great at surfacing sources, it’s also notorious for fabricating citations or linking to summaries instead of the original report. Always click through, check the publisher, and verify the data trail yourself. Think of the prompt as a way to save time finding leads, not a replacement for your own judgment.

Also, heads up that when we tested this for the purposes of this article, this prompt took up to 3 minutes to run.
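One small part of that click-through check can be roughed out in code: whether a link even lives on the publisher’s own site, rather than on a blog quoting the report. This is a minimal Python sketch, not a real verification tool. The function name `links_to_publisher` and the example domains are made up for illustration, and you’d have to supply the expected domain yourself. It can’t tell you whether the page exists or the stat is real, so it only helps you decide which links to open first.

```python
from urllib.parse import urlparse


def links_to_publisher(url: str, publisher_domain: str) -> bool:
    """Return True if the URL's host is the publisher's domain or a subdomain of it."""
    host = (urlparse(url).hostname or "").lower()
    domain = publisher_domain.lower()
    return host == domain or host.endswith("." + domain)


# Hypothetical rows copied from an AI-generated source list.
sources = [
    {"publisher_domain": "example.org", "url": "https://reports.example.org/2024-survey"},
    {"publisher_domain": "example.org", "url": "https://some-blog.example.com/recap"},
]

# Links that sit somewhere other than the publisher's site deserve a closer look.
needs_a_closer_look = [
    s["url"] for s in sources if not links_to_publisher(s["url"], s["publisher_domain"])
]
print(needs_a_closer_look)
```

Anything the script flags still needs a human to open it, and so does everything it doesn’t flag.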

Pro move: Once AI gives you a list of frameworks or debates, drop them into a two-column table: “consensus” vs. “contrarian/edge.” Then ask it to brainstorm audience objections to each side.

How to use AI to nail your audience voice

Sometimes, what you need to know is more about what your audience thinks about things. What questions do they have? What gets them ranting? What are the running debates about your topic?

Reddit can be a great resource for this — but who has time to read a billion threads?

This prompt can save some time:

Act as a research assistant. Search Reddit for active discussions where people are *arguing or disagreeing* about **[insert topic]**.

For each thread you find, provide:

1. Subreddit name

2. Thread title + link

3. Date of last activity (month/year)

4. Summary of the opposing viewpoints (2–3 sentences each)

5. A representative direct quote from each side (with username redacted)

6. Upvote/downvote counts (if available)

Only include threads from the last 18 months, and prioritize ones with 20+ comments. Do not paraphrase the direct quotes; use verbatim quotes.

Once you’ve verified the threads, you’ll have a solid understanding of the current mutterings about the topic. You might also be able to drop in a few quotes to back up your points (depending on your style guide).

Warning: Prompts like this are handy for surfacing debates, but, as always, AI has blind spots. It may pull older threads, overemphasize the loudest voices, or flatten the nuance of real arguments. Use it to find conversations — then read the threads yourself to catch context and quieter points that actually matter. Also, watch out for AI paraphrasing instead of quoting.
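Two of those blind spots (older threads and paraphrased “quotes”) are mechanical enough to spot-check with a few lines of code once you’ve opened the thread and pasted its text somewhere. The sketch below is only an illustration in Python; the helper names and the sample text are invented, and it assumes you’ve copied the thread text in by hand rather than pulling it from any Reddit API.

```python
import re
from datetime import date


def normalize(text: str) -> str:
    """Lowercase, straighten curly quotes, and collapse whitespace."""
    text = text.replace("\u2019", "'").replace("\u2018", "'")
    text = text.replace("\u201c", '"').replace("\u201d", '"')
    return re.sub(r"\s+", " ", text).strip().lower()


def is_verbatim(quote: str, thread_text: str) -> bool:
    """True if the quote appears word for word in the thread text you pasted in."""
    return normalize(quote) in normalize(thread_text)


def months_since(last_activity: date, today: date) -> int:
    """Whole calendar months between the thread's last activity and today."""
    return (today.year - last_activity.year) * 12 + (today.month - last_activity.month)


# Hypothetical example: thread text pasted in by hand after opening the link.
thread_text = "Honestly, onboarding over Zoom   felt like watching a webinar about my own job."
print(is_verbatim("felt like watching a webinar about my own job", thread_text))  # True
print(is_verbatim("felt like sitting through a webinar", thread_text))            # False
print(months_since(date(2024, 1, 15), today=date(2025, 9, 1)) <= 18)              # False
```

A failed match doesn’t prove the AI made the quote up (the comment may have been edited or deleted), but it does tell you not to publish it as a direct quote.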

Pro move: After AI surfaces Reddit or forum threads, ask it to pull 10–20 of the raw quotes into a doc. Highlight the exact words and phrases people use — especially metaphors, slang, or shorthand. Then build a quick “voice bank” with three columns: common wording, tone/emotion, and how I could echo this in my content. This way, you’re not just guessing at audience voice — you’re literally lifting the language they use to describe their problems.

Here’s what it looks like in action (I promise it did, in fact, provide 7 sources):

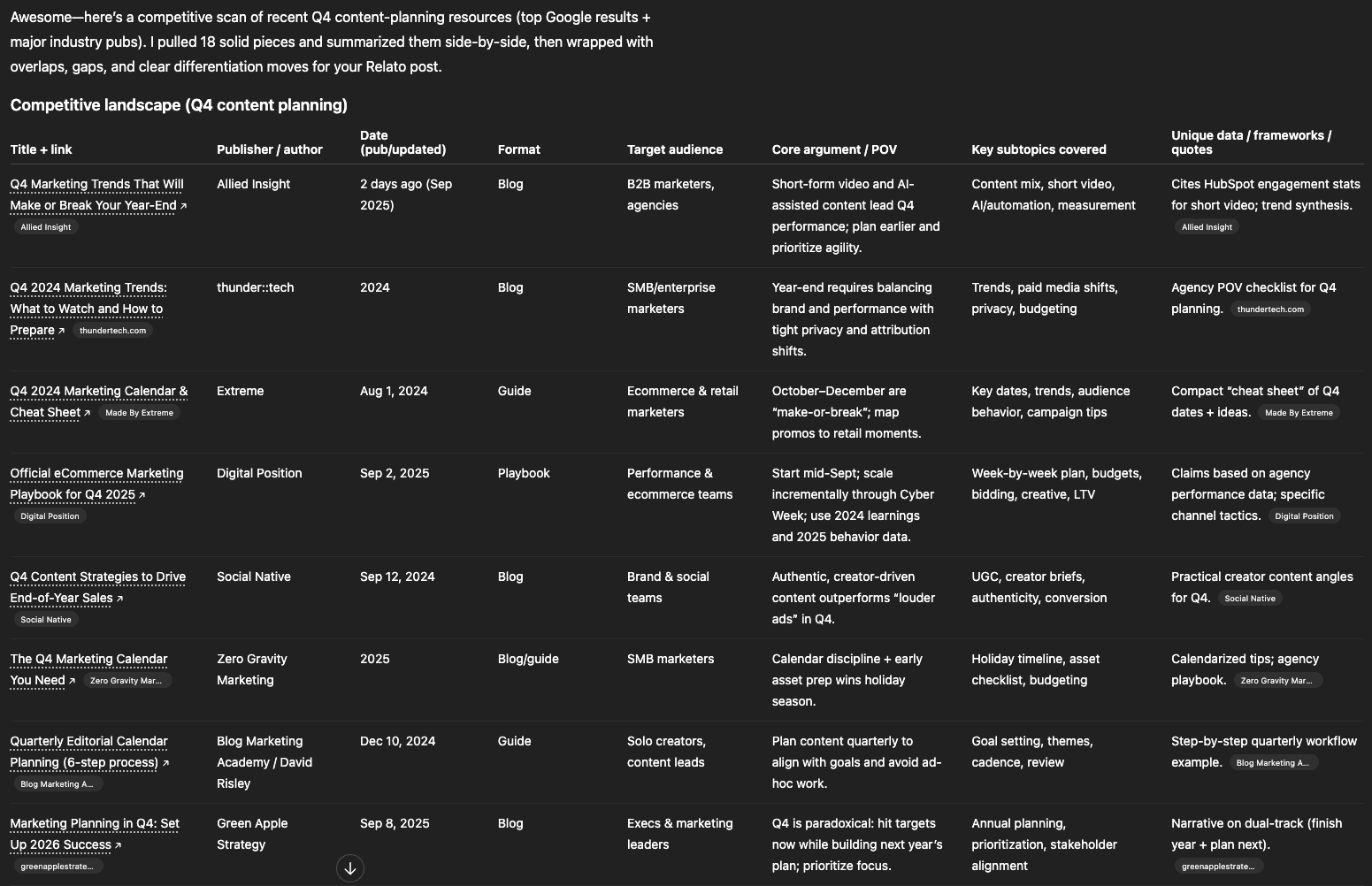

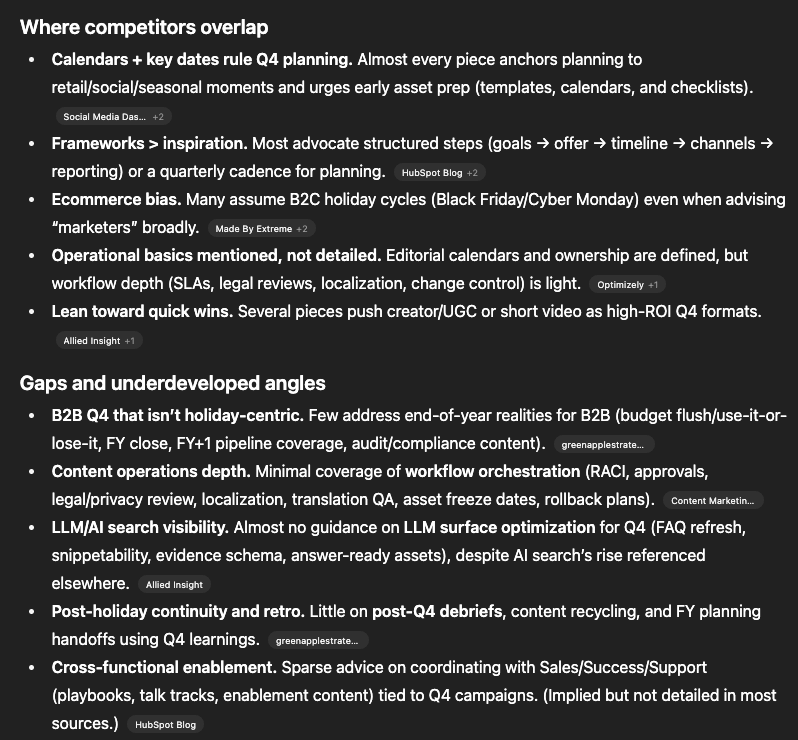

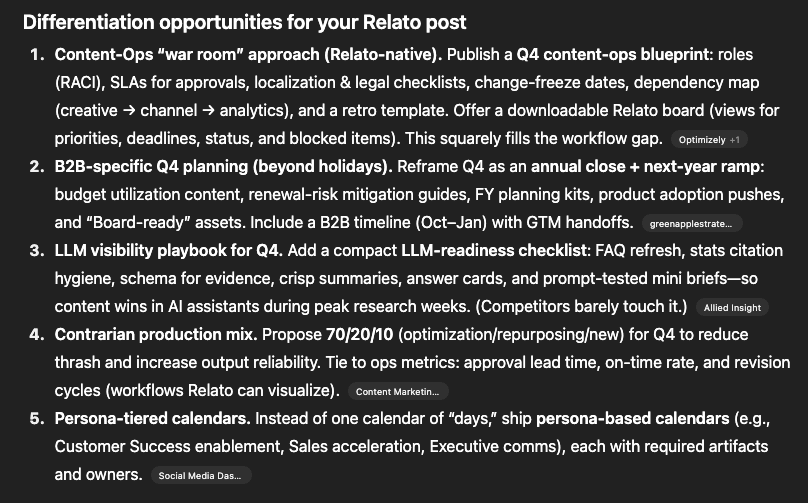

How to use AI to research your content competitors

Competitive content research shouldn’t mean scanning the top-ranking posts, copying the subheads, and making sure nothing is missing. That guarantees you’ll blend in.

The real value is in spotting patterns. When you compare 10 or 15 pieces, you notice the arguments everyone leans on, the stats that have been recycled to death, and the use cases nobody’s addressing.

You’re pressure-testing the consensus and finding the edges where your content can push further.

But reading those 15 pieces adds the kind of time most writers can’t afford. AI can help:

Act as a competitive content analyst. I am preparing to write a blog post on [insert topic].

- Search across the top 15–20 Google results, plus major industry publications, for content that directly addresses this topic.

- For each piece you find, provide:

  - Title + link

  - Publisher/author

  - Date published/last updated

  - Format (blog, guide, report, video transcript, etc.)

  - Target audience (based on tone + level of detail)

  - Core argument or POV (1–2 sentences)

  - Key subtopics covered

  - Any unique data, frameworks, or expert quotes included

- Summarize the common overlaps (topics, arguments, or approaches most competitors share).

- Identify the gaps or underdeveloped angles (questions left unanswered, perspectives missing, audiences underserved, outdated or weak data).

- Suggest at least 3 differentiation opportunities for my upcoming post (e.g. contrarian angle, deeper analysis, adding primary data, targeting a different persona, or reframing the problem).

- Provide all findings in a structured table followed by a narrative summary.

Warning: AI often struggles with the first step, the search itself. It may hallucinate sources or paraphrase summaries of summaries. Always click through the links, verify they exist, and skim for accuracy before using the information.
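Once you’ve confirmed the links are real, the “spotting patterns” part can be double-checked with a quick tally instead of trusting the AI’s summary. Here’s a rough Python sketch of that idea. The piece titles and subtopics are placeholder data, and the “more than half” cutoff is just an assumption you can tune.

```python
from collections import Counter

# Hypothetical rows from the AI's table, after you've confirmed each link is real.
pieces = [
    {"title": "Piece A", "subtopics": ["definitions", "tool roundup", "roi stats"]},
    {"title": "Piece B", "subtopics": ["definitions", "tool roundup"]},
    {"title": "Piece C", "subtopics": ["definitions", "roi stats", "manager training"]},
]

# Count how many pieces cover each subtopic (set() avoids double-counting within a piece).
coverage = Counter(topic for piece in pieces for topic in set(piece["subtopics"]))

# Subtopics in more than half the pieces are "consensus"; ones covered once are possible gaps.
threshold = len(pieces) / 2
overused = sorted(t for t, n in coverage.items() if n > threshold)
rare = sorted(t for t, n in coverage.items() if n == 1)

print("Everyone covers:", overused)
print("Barely covered:", rare)
```

The tally is only as good as the subtopic labels, so it’s worth normalizing the wording (for example, “ROI” vs. “return on investment”) before you count.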

Pro move: Don’t stop at gaps. Ask AI to score each competitor piece on Bland vs. Bold (use Erin Balsa’s framework: originality, novelty, proof, depth). It helps you see where your piece can stand out.

Here’s how that looks in real life (the actual table did have 20 results):

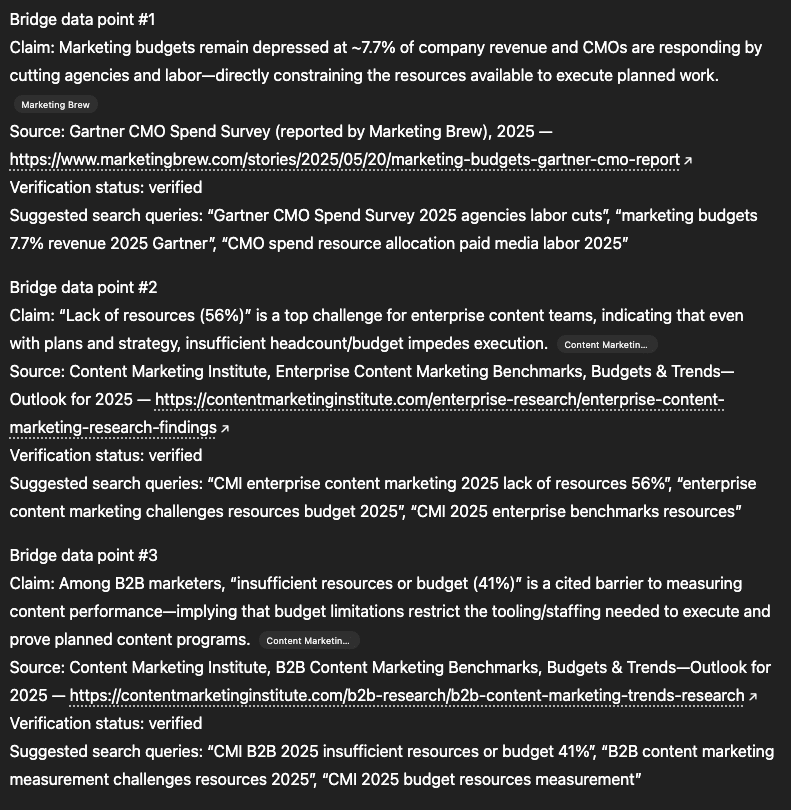

How to use AI to find supporting data and studies

This is where a lot of AI hallucinations can show up. I’ve wasted more hours than I’d like to admit arguing with ChatGPT about facts that it flat-out invented. (Wild that “debating robots” is now part of my job description, but here we are.)

Where I find AI shines is in surfacing the connective tissue between ideas. When you’re building a case, you’re usually stacking assumptions:

- Assumption A: Remote onboarding is harder for managers to personalize.

- Assumption B: Employees who don’t feel supported are more likely to churn.

To make that argument land, you need something in the middle — a data point that shows remote onboarding → less personalization → lower perception of support → higher churn.

On Google, finding that kind of “bridge” fact often means guessing keywords, opening a dozen PDFs, and hoping one of them connects the dots.

Because LLMs model relationships between concepts, not just keyword matches, they’re surprisingly good at suggesting the kinds of evidence that could sit between two assumptions.

The evidence isn’t always right — but it will often point you toward sources or angles you wouldn’t have thought to search for. Then use Google (or the source itself) to confirm the facts.

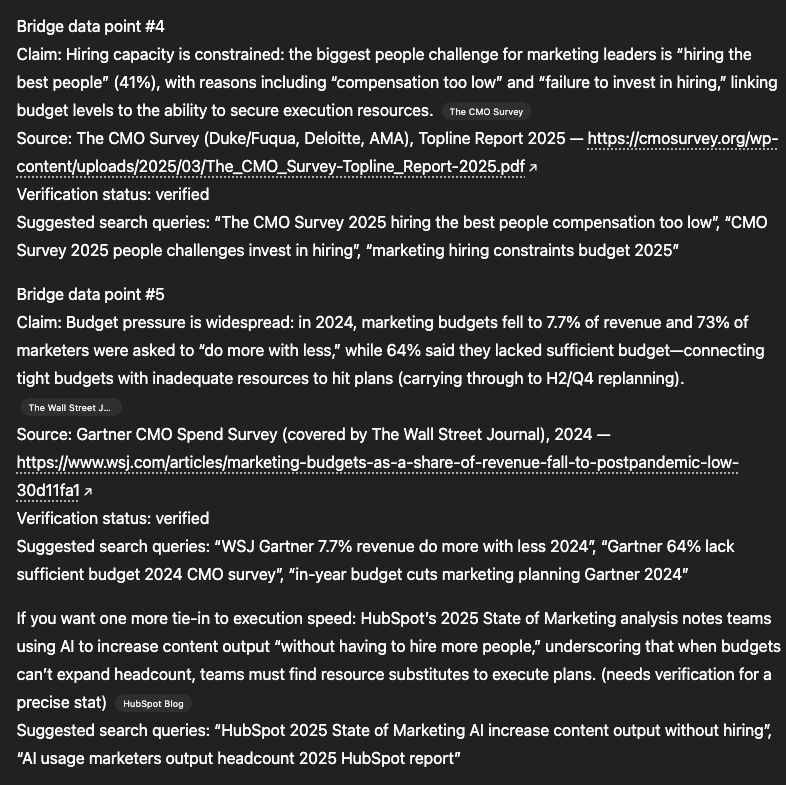

Here’s a prompt you could use:

Act as a research assistant. I’m building an argument that depends on two assumptions:

- Assumption A: [Insert first assumption here]

- Assumption B: [Insert second assumption here]

Your tasks:

- Identify potential data points, studies, or survey results that connect Assumption A to Assumption B.

- For each, provide:

  - A one-sentence summary of the claim.

  - The source (organization, author, or publication) and year.

  - A direct link if available.

- If you’re uncertain about a stat or can’t find a real source, clearly label it as “needs verification.”

- Suggest 2–3 keywords or search queries I could use to manually verify this information via Google Scholar, industry reports, or news articles.

Output format:

- Bridge data point #1

  - Claim: …

  - Source: …

  - Verification status: [verified / needs verification]

  - Suggested search queries: …

Repeat for 3–5 possible bridge data points.

Pro move: When AI gives you a stat and a sketchy-looking source, don’t waste time arguing with it. Look at the keywords in its answer and copy those straight into Google Scholar or a trusted database. The shortcut isn’t always the stat itself. It’s the search terms.
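If you find yourself doing this a lot, the copy-and-paste step can be scripted. The snippet below is a small Python sketch that turns a handful of keywords into a Google Scholar search link. The function name and the sample keywords are my own placeholders, and it assumes Scholar’s usual `scholar?q=` search URL format.

```python
from urllib.parse import urlencode


def scholar_search_url(keywords: list[str]) -> str:
    """Build a Google Scholar search URL from a list of keywords or phrases."""
    # Wrap multi-word phrases in quotes so they're searched as exact phrases.
    terms = [f'"{k}"' if " " in k else k for k in keywords]
    return "https://scholar.google.com/scholar?" + urlencode({"q": " ".join(terms)})


# Hypothetical keywords lifted from an AI answer about onboarding and churn.
print(scholar_search_url(["remote onboarding", "perceived support", "turnover"]))
```

Exact-phrase quoting keeps the search tight. If it returns nothing, drop the quotes and try the looser version.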

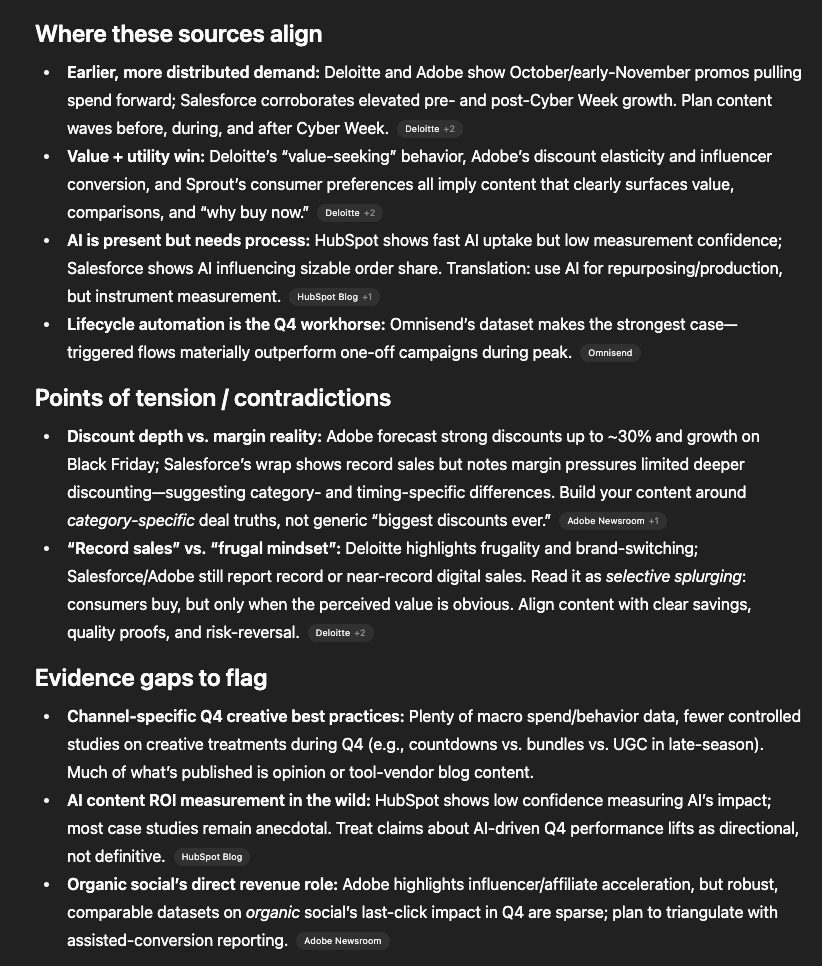

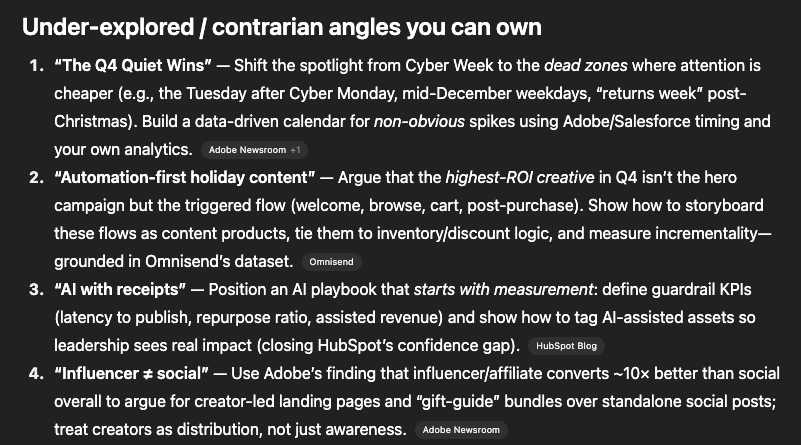

Here’s how the prompt delivered using our Q4 content planning example from earlier:

How to use AI to prep for SME interviews

When it comes to prepping for interviews, AI can be handy for making sure you’re not asking your expert to retread the same ground, especially if they’re already outspoken online.

Assuming you don’t have time to read everything your expert has ever said on the topic, here’s a prompt:

I’m preparing to interview [Expert Name] on the topic of [Topic]. Please do the following:

- Review publicly available content from this person — including LinkedIn posts, podcast appearances, interviews, and blog contributions.

- Summarize their actual stated views on [Topic] — highlight what they emphasize, how they frame it, and any specific use cases or examples they share.

- Identify areas where they explicitly disagree with common industry narratives or with other people — note how their perspective is different.

- Provide direct quotes or close paraphrases of what they’ve said, with links to the original content (and timestamps if from audio/video).

- Important: Do not invent, speculate, or hypothesize what they might think. Only include positions they have clearly stated in their own words.

Output format:

- View #1: [Summary]

  - Source: [Link / timestamp]

- View #2: [Summary]

  - Source: [Link / timestamp]

- Points of disagreement/differentiation: [List them clearly]

As always, don’t forget to fact-check.

I also like it for helping me think of more questions. Especially when I only have a surface-level understanding of the topic, AI can help me figure out what I wouldn’t know to ask.

If you’re talking to someone who’s already spoken about a topic extensively, try this:

I’m preparing to interview [Expert Name] on [Topic]. Please generate thoughtful, conversational interview questions that go beyond the basics.

Your tasks:

- Review what this expert has recently said (LinkedIn, podcasts, interviews, blogs).

- Spot any gaps, controversies, or contradictions in their takes.

- Draft 7–10 questions that:

  - Sound natural, like something I’d actually ask in a live conversation.

  - Push them beyond their “default” talking points.

  - Invite specific stories, examples, or data.

  - Explore angles where their view differs from the mainstream.

- Surface a few unexpected or challenging audience-style questions — the kind of curveballs I might not think of, but that a curious audience member might ask.

- For each question, briefly note which past statement or theme it builds on (with a source link if possible).

Output format:

- Question: [Write the question in a casual, conversational style]

- Based on: [Link or reference to past statement]

Or, if you’re looking to get to the lived experience of an internal SME (i.e. not someone who’s already published a lot on the topic), then you could try something like this:

I’m preparing to interview [SME name/role] on [Topic]. They haven’t published much on this, but they have hands-on experience that’s valuable for content. Please help me generate insightful, conversational questions.

Your tasks:

- Assume this SME has practical, lived experience but not polished talking points.

- Draft 7–10 questions that:

  - Sound natural, like a curious colleague asking.

  - Pull out stories, “how we actually do this” examples, or step-by-step explanations.

  - Explore pain points, lessons learned, and trade-offs they’ve seen in practice.

  - Surface what they wish outsiders understood about their work.

- Include a couple of unexpected, non-obvious questions — things I might not think of myself, but that an engaged audience or curious outsider would likely ask.

- For each question, explain briefly why it’s valuable for turning their expertise into content.

Output format:

- Question: [Write the question in a casual, conversational style]

- Why it’s valuable: [Note]

Sorry, no real-time example of this one, as the results would be super-specific to your SME. Same with the last prompt below. But you get the gist.

And finally, if you can’t get hold of the expert you’d really love to talk to, you can always just role-play with it to strengthen your argument:

"Here's a summary of my thesis [Add in the argument you're currently making in your piece].

Pretend you're [industry expert] and pull it apart. Show me where I'm wrong, where I'm oversimplifying, and what I'm missing."

Note: I don’t find AI helpful for identifying the right people to talk to. I tried it out for the purposes of this article, and it didn’t pull any of the people I’d consider industry experts on my given topic — simply the authors of the top-performing blog posts on Google. It also invented a bunch of people and advised me to talk to people who had made derivative carousels on LinkedIn, rather than true experts.

Despite it all, AI really can save you research time

If you treat AI as a source of truth, it’ll hand you bad stats, misattributed quotes, and made-up journals.

But if you treat it as a research assistant — one that’s fast, creative, but a little sloppy — it can make your process quicker at every stage. It can surface debates you wouldn’t have seen, wording your audience actually uses, gaps in the competitive landscape, bridge facts for your arguments, and sharper questions for your experts.

The real difference comes down to your workflow. Without a system, research stays ad hoc: every writer reinventing the wheel, every editor scrambling to fact-check at the end. With a system, research gets built into the workflow from the start.

That’s what Relato is for. It’s a content ops tool that lets you bake source checks, prompt templates, and verification steps into your briefs and processes. So your team can move faster and publish with confidence.

AI won’t replace critical thinking. But with the right ops in place, you’ll scale content without scaling errors — and always have the receipts to back it up.
